Joint characterization of two random variables

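Two random variables x and y can be jointly characterized by their joint cumulative distribution function (CDF) or by their joint probability density function (PDF), defined as follows:

$$F_{xy}(\alpha,\beta) = P(x \le \alpha,\ y \le \beta)$$

$$f_{xy}(\alpha,\beta) = \frac{\partial^2 F_{xy}(\alpha,\beta)}{\partial\alpha\,\partial\beta}$$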

If the two variables are independent, the joint PDF is the product of the marginal probability density functions:

$$f_{xy}(\alpha,\beta) = f_x(\alpha)\,f_y(\beta)$$

This reasoning extends to a set of n random variables: if they are mutually independent, their joint PDF is the product of the n marginal probability density functions:

$$f_{x_1 \cdots x_n}(\alpha_1,\ldots,\alpha_n) = \prod_{i=1}^{n} f_{x_i}(\alpha_i)$$
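As a numerical illustration of these definitions, the sketch below assumes two independent exponential variables with rates 1.0 and 0.5 (an arbitrary choice made only for the example). It builds the joint PDF as the product of the marginals, integrates it over the quadrant below the point (x, y) to approximate the joint CDF, and compares the result with the product of the marginal CDFs, which is what independence predicts. All helper names are hypothetical.

```python
import math


def exp_pdf(t, lam):
    """Marginal PDF of an exponential random variable with rate lam."""
    return lam * math.exp(-lam * t) if t >= 0 else 0.0


def exp_cdf(t, lam):
    """Marginal CDF of an exponential random variable with rate lam."""
    return 1.0 - math.exp(-lam * t) if t >= 0 else 0.0


def joint_pdf_independent(point, marginal_pdfs):
    """Joint PDF of independent variables: product of their marginal PDFs."""
    result = 1.0
    for value, pdf in zip(point, marginal_pdfs):
        result *= pdf(value)
    return result


def joint_cdf_2d(x, y, joint_pdf, n=400):
    """Approximate F_xy(x, y) = P(X <= x, Y <= y) with a 2-D midpoint rule.

    The lower limits are 0 because both exponential PDFs vanish for t < 0.
    """
    hx, hy = x / n, y / n
    total = 0.0
    for i in range(n):
        u = (i + 0.5) * hx
        for j in range(n):
            total += joint_pdf(u, (j + 0.5) * hy)
    return total * hx * hy


# Assumed example: x ~ Exp(rate 1.0) and y ~ Exp(rate 0.5), independent
marginals = [lambda t: exp_pdf(t, 1.0), lambda t: exp_pdf(t, 0.5)]


def f_xy(u, v):
    """Joint PDF built as the product of the two marginal PDFs."""
    return joint_pdf_independent((u, v), marginals)


approx = joint_cdf_2d(1.0, 2.0, f_xy)
exact = exp_cdf(1.0, 1.0) * exp_cdf(2.0, 0.5)  # independence: F_xy = F_x * F_y
print(f"integrated joint CDF: {approx:.6f}, product of marginal CDFs: {exact:.6f}")

# The same product rule for n = 3 independent variables, all with rate 1.0
three = [lambda t: exp_pdf(t, 1.0)] * 3
print(joint_pdf_independent((0.2, 0.3, 0.4), three))  # approximately exp(-0.9)
```

The joint CDF of independent variables factorizes in the same way as the joint PDF, so the two printed values should agree up to the quadrature error.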

Example: find the PDF of the random variable resulting from the sum of two independent random variables, given their marginal PDFs.

This problem arises, for instance, when characterizing noise that results from adding two different kinds of interference. The objective is to find the PDF of the random variable z = x + y as a function of the PDFs of the random variables x and y.
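Before deriving the result analytically, it can help to look at the PDF of z empirically. The sketch below simulates the noise model under an assumed choice of interferences: x and y independent and uniform on [0, 1] (a hypothetical model; any pair of samplers could be plugged in). It draws samples of z = x + y and estimates both the CDF and a histogram-style PDF of z. The function names are illustrative only.

```python
import random


def simulate_sum(sample_x, sample_y, n, seed=0):
    """Draw n samples of z = x + y, with x and y drawn independently."""
    rng = random.Random(seed)
    return [sample_x(rng) + sample_y(rng) for _ in range(n)]


def empirical_cdf(samples, z):
    """Fraction of samples that are <= z (an estimate of F_z(z))."""
    return sum(1 for s in samples if s <= z) / len(samples)


def empirical_pdf(samples, z, width=0.05):
    """Histogram-style estimate of f_z(z) from a bin of the given width centred on z."""
    half = width / 2
    count = sum(1 for s in samples if z - half < s <= z + half)
    return count / (len(samples) * width)


# Hypothetical interference model: two independent uniform(0, 1) sources
zs = simulate_sum(lambda r: r.random(), lambda r: r.random(), 100_000)
print(empirical_cdf(zs, 0.5))  # the exact value for this model is 0.125
print(empirical_pdf(zs, 1.0))  # the exact PDF peaks at 1.0 for z = 1
```

Simulation only gives an approximation that improves slowly with the number of samples; the analytic procedure that follows gives the PDF of z exactly.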

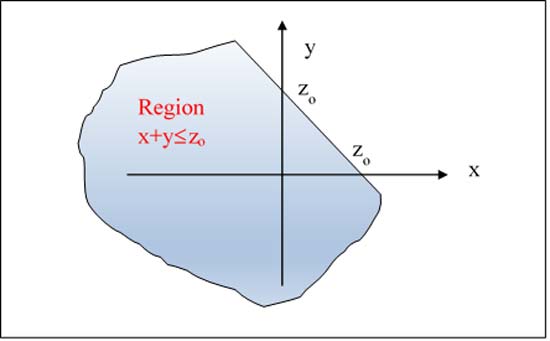

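The procedure consists of first finding the cumulative distribution function of z, by integrating the joint PDF over the region where x + y ≤ z (the half-plane below the line y = z - x), and then differentiating it:

$$F_z(\gamma) = P(x + y \le \gamma) = \int_{-\infty}^{\infty}\int_{-\infty}^{\gamma-\alpha} f_{xy}(\alpha,\beta)\,d\beta\,d\alpha$$

$$f_z(\gamma) = \frac{dF_z(\gamma)}{d\gamma} = \int_{-\infty}^{\infty} f_{xy}(\alpha,\gamma-\alpha)\,d\alpha$$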

Figure 4. Graphical representation of the integration area used to find the CDF of the sum of two random variables
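The procedure can be sketched numerically. Assuming, for illustration only, that x and y are independent and uniform on [0, 1] (so the joint PDF equals 1 on the unit square), the code below integrates the joint PDF over the part of the support lying below the line x + y = z to obtain F_z(z), then differentiates with a central difference to obtain f_z(z). `cdf_of_sum` and `pdf_of_sum` are hypothetical helpers, and the joint PDF is assumed to vanish outside the rectangle passed in.

```python
def cdf_of_sum(z, joint_pdf, x_range, y_range, n=400):
    """F_z(z) = P(x + y <= z): integrate the joint PDF over the half-plane y <= z - x.

    Assumes joint_pdf is zero outside the rectangle x_range by y_range.
    """
    x_lo, x_hi = x_range
    y_lo, y_hi = y_range
    hx = (x_hi - x_lo) / n
    total = 0.0
    for i in range(n):
        x = x_lo + (i + 0.5) * hx
        upper = min(z - x, y_hi)  # inner limit set by the line x + y = z
        if upper <= y_lo:
            continue  # this vertical strip lies entirely above the line
        hy = (upper - y_lo) / n
        inner = sum(joint_pdf(x, y_lo + (j + 0.5) * hy) for j in range(n))
        total += inner * hy
    return total * hx


def pdf_of_sum(z, joint_pdf, x_range, y_range, h=0.01):
    """f_z(z) = dF_z/dz, approximated by a central difference of the CDF."""
    return (cdf_of_sum(z + h, joint_pdf, x_range, y_range)
            - cdf_of_sum(z - h, joint_pdf, x_range, y_range)) / (2 * h)


def uniform_joint(x, y):
    """Assumed joint PDF: x and y independent and uniform on [0, 1]."""
    return 1.0


print(cdf_of_sum(0.5, uniform_joint, (0, 1), (0, 1)))  # exact value: 0.5**2 / 2 = 0.125
print(pdf_of_sum(0.5, uniform_joint, (0, 1), (0, 1)))  # exact value: 0.5
```

For this uniform example the result is the triangular PDF f_z(z) = z on [0, 1] and 2 - z on [1, 2], which matches the convolution of the two rectangular marginal PDFs.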

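If both random variables are independent, the joint PDF factorizes and the PDF of z becomes:

$$f_z(\gamma) = \int_{-\infty}^{\infty} f_x(\alpha)\,f_y(\gamma-\alpha)\,d\alpha = (f_x * f_y)(\gamma)$$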

The PDF of the sum of two independent random variables is therefore the CONVOLUTION of the marginal PDFs. An important consequence is that the sum of independent normal (Gaussian) random variables is always Gaussian, since the convolution of two Gaussian functions is again a Gaussian function.
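The Gaussian result can be checked by evaluating the convolution integral numerically. This sketch assumes, purely as an example, independent x ~ N(1, 1) and y ~ N(-2, 4); the prediction N(-1, 5) for z is obtained by adding the means and adding the variances. `convolve_pdfs` is a hypothetical helper using a simple midpoint rule over a finite interval that must cover the region where f_x is not negligible.

```python
import math


def gaussian_pdf(t, mean, std):
    """PDF of a normal random variable N(mean, std**2)."""
    return math.exp(-0.5 * ((t - mean) / std) ** 2) / (std * math.sqrt(2.0 * math.pi))


def convolve_pdfs(f_x, f_y, z, lo, hi, n=4000):
    """f_z(z) = integral of f_x(a) * f_y(z - a) da, midpoint rule over [lo, hi]."""
    h = (hi - lo) / n
    total = 0.0
    for k in range(n):
        a = lo + (k + 0.5) * h
        total += f_x(a) * f_y(z - a)
    return total * h


def f_x(t):
    """Assumed marginal PDF of x: N(1, 1)."""
    return gaussian_pdf(t, 1.0, 1.0)


def f_y(t):
    """Assumed marginal PDF of y: N(-2, 2**2)."""
    return gaussian_pdf(t, -2.0, 2.0)


# Prediction for z = x + y: N(1 + (-2), 1 + 4) = N(-1, 5)
for z in (-4.0, -1.0, 2.5):
    numeric = convolve_pdfs(f_x, f_y, z, -11.0, 13.0)  # [-11, 13] is 1 +/- 12 std of x
    predicted = gaussian_pdf(z, -1.0, math.sqrt(5.0))
    print(f"z = {z:5.1f}: convolution {numeric:.8f}, N(-1, 5) PDF {predicted:.8f}")
```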

An important question arises: why not use the Fourier transform instead, so that the Fourier transform of the desired PDF is obtained as the product of the Fourier transforms of the marginal PDFs?

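- Characteristic function: it is defined as the Fourier transform of the probability density function (in the usual convention, with the sign of the exponent reversed):
  $$\Phi_x(\omega) = E\{e^{j\omega x}\} = \int_{-\infty}^{\infty} f_x(\alpha)\,e^{j\omega\alpha}\,d\alpha$$
  Since a convolution becomes a product in the transformed domain, for independent x and y the characteristic function of z = x + y is $\Phi_z(\omega) = \Phi_x(\omega)\,\Phi_y(\omega)$.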
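The product property can be checked numerically. The sketch below assumes x and y are independent exponential variables with rate λ = 2 (an illustrative choice) and uses the convention Φ(ω) = E[e^{jωx}]. Their sum z then has the Erlang (gamma, shape 2) PDF λ² z e^{-λz}, which is the convolution of the two exponential PDFs. Computing each characteristic function by direct numerical integration shows Φ_z(ω) agreeing with Φ_x(ω)·Φ_y(ω) and with the closed form (λ/(λ - jω))². `characteristic_function` is a hypothetical helper name.

```python
import cmath
import math

LAM = 2.0  # assumed rate of both exponential variables


def characteristic_function(pdf, omega, lo, hi, n=20000):
    """Phi(omega) = E[exp(j*omega*x)] = integral of f(a) * exp(j*omega*a) da (midpoint rule)."""
    h = (hi - lo) / n
    total = 0j
    for k in range(n):
        a = lo + (k + 0.5) * h
        total += pdf(a) * cmath.exp(1j * omega * a)
    return total * h


def exp_pdf(a):
    """Marginal PDF shared by x and y: exponential with rate LAM."""
    return LAM * math.exp(-LAM * a) if a >= 0 else 0.0


def erlang_pdf(a):
    """PDF of z = x + y: the convolution of the two exponential PDFs."""
    return LAM ** 2 * a * math.exp(-LAM * a) if a >= 0 else 0.0


for omega in (0.5, 1.5, 4.0):
    # [0, 20] covers the support up to a negligible tail (about exp(-40))
    phi_x = characteristic_function(exp_pdf, omega, 0.0, 20.0)
    phi_z = characteristic_function(erlang_pdf, omega, 0.0, 20.0)
    closed_form = (LAM / (LAM - 1j * omega)) ** 2
    print(omega, abs(phi_z - phi_x * phi_x), abs(phi_z - closed_form))
```

Both printed differences should be close to zero. In practice this means the convolution can be replaced by a product of characteristic functions, followed by an inverse transform to recover f_z.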